prediction_vector

Module: resources.dataset_defs.prediction_vector

Load and store datasets containing Prediction Vectors.

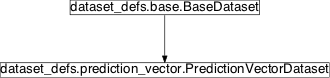

Inheritance diagram for pySPACE.resources.dataset_defs.prediction_vector:

PredictionVectorDataset

class pySPACE.resources.dataset_defs.prediction_vector.PredictionVectorDataset(dataset_md=None, num_predictors=1, **kwargs)

Bases: pySPACE.resources.dataset_defs.base.BaseDataset

Prediction Vector dataset class

The class at hand contains the methods needed to work with datasets consisting of PredictionVector objects. The following data formats are currently supported:

- *.csv - with or without a header column

- *.pickle

TODO: Add functionality for the *.arff format.
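The description does not say how a CSV header is detected when a file may come "with or without" one. As a minimal sketch under that uncertainty, the hypothetical helper has_header_row below treats the first row as a header when any non-label cell fails to parse as a float. The label column is skipped because labels such as 'Target' are strings even in data rows. This heuristic is an assumption, not pySPACE's documented behavior.

```python
import csv
import io


def has_header_row(text, delimiter=",", label_column=-1):
    """Hypothetical heuristic: report a header when any non-label cell
    of the first row cannot be parsed as a float."""
    reader = csv.reader(io.StringIO(text), delimiter=delimiter)
    first_row = next(reader, [])
    if not first_row:
        return False  # empty input: nothing to skip
    label_index = label_column % len(first_row)  # -1 -> last column
    for index, cell in enumerate(first_row):
        if index == label_index:
            continue  # labels are strings, so they say nothing about a header
        try:
            float(cell)
        except ValueError:
            return True
    return False


print(has_header_row("pred_0,pred_1,label\n0.8,-0.2,Target\n"))  # True
print(has_header_row("0.8,-0.2,Target\n-0.5,0.1,Standard\n"))    # False
```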

Note

The implementation of the current dataset is adapted from FeatureVectorDataset and TimeSeriesDataset. For more thorough documentation, we refer the reader to these two datasets.

Parameters

dataset_md: Dictionary containing meta data for the collection to be loaded. Of these parameters, the most important one is the number of predictors, since it determines how the Prediction Vectors are generated.

num_predictors: The number of predictors that each PredictionVector contains. This parameter determines the dimensionality of the PredictionVector.

Special CSV Parameters

delimiter: Symbol separating the entries; needed only when dealing with *.csv files. Typically ‘,’ or the tabulator ‘\t’ is used. When storing, ‘,’ is used.

(recommended, default: ‘,’)

label_column: Column containing the true label of the data point.

Normally this column loses its heading. When saving the CSV file, the default, -1, is used.

(recommended, default: -1)

ignored_columns: List of the numbers of irrelevant columns, e.g., [1,2,8,42].

After the data is loaded, this parameter becomes obsolete.

(optional, default: [])

ignored_rows: List of the numbers of irrelevant rows; analogous to ignored_columns, with rows in place of columns.

(optional, default: [])
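The CSV parameters above can be illustrated with a short sketch built on Python's csv module. The function parse_prediction_csv and its has_header argument are hypothetical. The sketch assumes 0-based indices (rows counted after an optional header, columns counted in the raw file), which the parameter descriptions do not confirm; pySPACE may count from 1. It also checks that each row yields exactly num_predictors values, reflecting the role of num_predictors in fixing the dimensionality.

```python
import csv
import io


def parse_prediction_csv(text, num_predictors=1, delimiter=",",
                         label_column=-1, ignored_columns=(),
                         ignored_rows=(), has_header=False):
    """Hypothetical parser returning a list of (predictions, label) pairs.

    Assumes 0-based indices: rows are counted after an optional header,
    columns are counted in the raw file.
    """
    rows = list(csv.reader(io.StringIO(text), delimiter=delimiter))
    if has_header:
        rows = rows[1:]  # drop the header row
    samples = []
    for row_index, row in enumerate(rows):
        if not row or row_index in ignored_rows:
            continue  # skip blank lines and ignored rows
        label_index = label_column % len(row)  # -1 -> last column
        predictions = [float(cell) for index, cell in enumerate(row)
                       if index != label_index and index not in ignored_columns]
        if len(predictions) != num_predictors:
            raise ValueError("row %d has %d predictions, expected %d"
                             % (row_index, len(predictions), num_predictors))
        samples.append((predictions, row[label_index]))
    return samples


csv_text = "0.8,-0.2,Target\n-0.5,0.1,Standard\n9,9,Broken\n"
print(parse_prediction_csv(csv_text, num_predictors=2, ignored_rows=[2]))
# [([0.8, -0.2], 'Target'), ([-0.5, 0.1], 'Standard')]
```

Raising on a dimensionality mismatch, rather than silently padding or truncating, keeps malformed rows from producing vectors of inconsistent length.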

Author: Andrei Ignat (andrei_cristian.ignat@dfki.de)
Created: 2014/10/15

Class Components Summary

- add_sample(sample, label, train[, split, run]): Add a prediction vector to this collection.

- dump(result_path, name): Dump this collection into a file.

- get_data(run_nr, split_nr, train_test): Load the data from a prediction file.

- store(result_dir[, s_format]): Store the collection in result_dir.
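The signatures above suggest that samples are grouped by run number, split number and a train/test flag. The class below is a hypothetical in-memory stand-in, not pySPACE's implementation: it assumes that train is a boolean mapped to "train"/"test", that split and run default to 0, and that dump writes a plain pickle of the grouped data to <name>.pickle.

```python
import os
import pickle
import tempfile


class InMemoryPredictionStore:
    """Hypothetical stand-in for the bookkeeping implied by add_sample and
    get_data; not pySPACE's implementation."""

    def __init__(self):
        # (run_nr, split_nr, "train" or "test") -> list of (sample, label)
        self.data = {}

    def add_sample(self, sample, label, train, split=0, run=0):
        # Assumption: train is a boolean flag mapped to "train"/"test".
        train_test = "train" if train else "test"
        self.data.setdefault((run, split, train_test), []).append((sample, label))

    def get_data(self, run_nr, split_nr, train_test):
        # Return a copy so callers cannot mutate the stored list.
        return list(self.data.get((run_nr, split_nr, train_test), []))

    def dump(self, result_path, name):
        # Assumption: a plain pickle of the dictionary, named <name>.pickle.
        path = os.path.join(result_path, name + ".pickle")
        with open(path, "wb") as handle:
            pickle.dump(self.data, handle)
        return path


store = InMemoryPredictionStore()
store.add_sample([0.8, -0.2], "Target", train=True)
store.add_sample([-0.5, 0.1], "Standard", train=False, split=1)
print(store.get_data(0, 1, "test"))  # [([-0.5, 0.1], 'Standard')]
with tempfile.TemporaryDirectory() as result_dir:
    with open(store.dump(result_dir, "predictions"), "rb") as handle:
        print(pickle.load(handle) == store.data)  # True
```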

__init__(dataset_md=None, num_predictors=1, **kwargs)

Read out the data from the given collection.