feature_vector

Module: resources.dataset_defs.feature_vector

Load and store data sets containing Feature Vectors

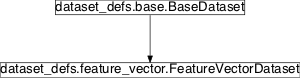

Inheritance diagram for pySPACE.resources.dataset_defs.feature_vector:

FeatureVectorDataset

class pySPACE.resources.dataset_defs.feature_vector.FeatureVectorDataset(dataset_md=None, classes_names=[], feature_names=None, num_features=None, **kwargs)

Bases: pySPACE.resources.dataset_defs.base.BaseDataset

Feature vector dataset class

This class is mainly used for loading FeatureVector data from the file system and storing it there. You can load it using a feature_vector_source node and save it using a feature_vector_sink node in a NodeChainOperation.

The constructor expects the argument dataset_md, a dictionary containing all the meta data. It is normally loaded from the metadata.yaml file.

It can load csv files, arff files and pickle files, where one file always holds exactly one training or test set. The naming conventions are the same as described in TimeSeriesDataset. A metadata.yaml file must exist that gives all the relevant information about the data set, especially the storage format, which can be pickle, arff, csv or csvUnnamed. The last format is only for loading data without a heading and with the labels not in the last column.

pickle-files

See TimeSeriesDataset for name conventions (in the tutorial).

arff-files

http://weka.wikispaces.com/ARFF

This format was introduced to connect pySPACE with weka. So when using weka, you need to choose this file format as the parameter storage format in the preprocessing operation’s spec file.

CSV (comma separated values)

These are tables in a simple text format: columns are separated by commas and rows by newlines. Normally the first line gives the feature names and one column gives the class labels. Therefore several parameters need to be specified in the metadata.yaml file.

If no collection meta data is available for the input data, the ‘metadata.yaml’ file can be generated with md_creator. Please also consider some important parameters described in the get_data function.

Preferably the labels are in the last column. This corresponds to label_column being -1 in the metadata.yaml file.

Special CSV Parameters

label_column: Column containing the labels

Normally this column loses its heading. When saving the csv file, the default, -1, is used.

(recommended, default: -1)

ignored_columns: List of numbers containing the numbers of irrelevant columns, e.g., [1,2,8,42]

After the data is loaded, this parameter becomes obsolete.

(optional, default: [])

ignored_rows: Same as ‘ignored_columns’, but for rows: a list of the numbers of irrelevant rows

(optional, default: [])

delimiter: Symbol which separates the csv entries

Typically a comma (,) or the tabulator is used. When storing, a comma is used.

(recommended, default: ‘,’)
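How these four parameters interact can be sketched with the standard csv module. This is only an illustration, not the pySPACE loader: load_csv_features is a hypothetical helper, column and row numbers are assumed to be zero-based, the first row is assumed to hold the headings, and ignored_rows is assumed to count data rows after the heading.

```python
import csv
import io


def load_csv_features(text, label_column=-1, ignored_columns=(),
                      ignored_rows=(), delimiter=","):
    """Split CSV text into feature names, feature vectors and labels.

    Hypothetical helper, not part of pySPACE. Indices are assumed to be
    zero-based and the first row is assumed to contain the headings.
    """
    rows = list(csv.reader(io.StringIO(text), delimiter=delimiter))
    header, body = rows[0], rows[1:]
    n_columns = len(header)
    # Resolve a negative label column such as -1 to an absolute index.
    label_index = label_column % n_columns
    skipped = {c % n_columns for c in ignored_columns} | {label_index}
    kept = [i for i in range(n_columns) if i not in skipped]

    feature_names = [header[i] for i in kept]
    vectors, labels = [], []
    for row_number, row in enumerate(body):
        if row_number in ignored_rows:
            continue  # assumed: row numbers count data rows only
        vectors.append([float(row[i]) for i in kept])
        labels.append(row[label_index])
    return feature_names, vectors, labels


text = "f1,f2,note,label\n1.0,2.0,x,A\n3.0,4.0,y,B\n5.0,6.0,z,A\n"
names, vectors, labels = load_csv_features(
    text, ignored_columns=[2], ignored_rows=[1])
```

Here the third column and the second data row are dropped, and the last column is split off as the label.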

Parameters

dataset_md: dictionary containing meta data for the collection to be loaded

The following three parameters contain standard information for a feature vector data set. Normally they are not needed, because a dataset_md is given and real data is loaded, so this information can be derived from the data. Nevertheless, these are important entries that should be found in each dataset_md, giving information about the data set.

classes_names: list of the used class labels

feature_names: list of the feature names

The feature names are either determined during the loading of the data, if available in the respective storage_format, or they are later on set with a default string (e.g., feature_0_0.000sec).

num_features: number of the given features
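As a rough illustration, a dataset_md dictionary for a csv data set might look like the following. The keys are the parameter names used in this description; the values are made up, and a real metadata.yaml may contain further entries or a different layout.

```python
# Hypothetical meta data for a csv data set. The keys are taken from the
# parameter descriptions; all values are invented for illustration.
dataset_md = {
    "storage_format": ["csv", "real"],
    "label_column": -1,
    "ignored_columns": [],
    "ignored_rows": [],
    "delimiter": ",",
    "classes_names": ["Standard", "Target"],
    "feature_names": ["feature_0", "feature_1"],
    "num_features": 2,
}

# Simple consistency check between the redundant entries.
assert dataset_md["num_features"] == len(dataset_md["feature_names"])
```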

Class Components Summary

| Method | Description |
| --- | --- |
| add_sample(sample, label, train[, split, run]) | Add a sample to this collection |
| dump(result_path, name) | Dumps this collection into a file. |
| get_data(run_nr, split_nr, train_test) | Loads the data from the feature file of the current input collection depending on the storage_format. |
| store(result_dir[, s_format]) | Stores this collection in the directory result_dir. |

__init__(dataset_md=None, classes_names=[], feature_names=None, num_features=None, **kwargs)

Read out the data from the given collection

Note

The main loading concept is copied from the time series collection. It still needs to be checked whether the code can be moved to the superclass.

add_sample(sample, label, train, split=0, run=0)

Add a sample to this collection

Adds the sample sample along with its class label label to this collection.

Parameters

sample: The respective data sample

label: The label of the data sample

train: If train, this sample has already been used for training

split: The number of the split this sample belongs to. (optional, default: 0)

run: The run number this sample belongs to

(optional, default: 0)
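One way to picture the effect of these parameters is a container that groups samples by run, split and train/test. The internal storage of the real class is not described here, so the following TinyCollection is purely a hypothetical stand-in that mirrors the add_sample signature.

```python
from collections import defaultdict


class TinyCollection:
    """Hypothetical stand-in for a data set, not the pySPACE class."""

    def __init__(self):
        # Maps (run, split, "train" or "test") to a list of (sample, label).
        self.data = defaultdict(list)

    def add_sample(self, sample, label, train, split=0, run=0):
        key = (run, split, "train" if train else "test")
        self.data[key].append((sample, label))


collection = TinyCollection()
collection.add_sample([0.1, 0.2], "Target", train=True)
collection.add_sample([0.3, 0.4], "Standard", train=False, split=1)
```

Grouping by this key matches the later description of store, which writes a separate data set for each run, split and train/test combination.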

dump(result_path, name)

Dumps this collection into a file.

Dumps (i.e., pickles) this collection object into a bz2-compressed file. In contrast to store, this method writes the whole collection into a single file. No meta data is stored in a YAML file.

The method expects the following parameters:

result_path: The path to the directory in which the pickle file will be written.

name: The name of the pickle file
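Pickling an object into a bz2-compressed file can be sketched with the standard library as follows. The helpers dump_object and load_object are hypothetical and not the pySPACE implementation; in particular, the file name is used exactly as given, which may differ from what dump does.

```python
import bz2
import os
import pickle
import tempfile


def dump_object(obj, result_path, name):
    """Pickle obj into a bz2-compressed file inside result_path.

    Hypothetical helper; the file name is used exactly as given.
    """
    file_path = os.path.join(result_path, name)
    with bz2.BZ2File(file_path, "wb") as handle:
        pickle.dump(obj, handle, protocol=pickle.HIGHEST_PROTOCOL)
    return file_path


def load_object(file_path):
    """Read back an object written by dump_object."""
    with bz2.BZ2File(file_path, "rb") as handle:
        return pickle.load(handle)


with tempfile.TemporaryDirectory() as directory:
    path = dump_object({"labels": ["A", "B"]}, directory, "collection.pickle")
    restored = load_object(path)
```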

get_data(run_nr, split_nr, train_test)

Loads the data from the feature file of the current input collection depending on the storage_format. Separates the actual vectors from the names and returns both as lists.

The method expects the following parameters:

feature_file: the file of feature vectors to be loaded

storage_format: Format in which the feature_file was saved, given as the first component of a list such as [‘arff’, ‘real’], [‘csv’, ‘real’] or [‘csvUnnamed’, ‘real’]. This information needs to be present in the meta data. For documentation of arff and pickle files, see the class description (docstring). Pickle files do not need any special loading because they already have the required format.

CSV

If no collection meta data is available for the input data, the ‘metadata.yaml’ file can be generated with pySPACE.run.node_chain_scripts.md_creator.

If you created the csv file with pySPACE, you automatically have the standard csv format with the feature names in the first row and the labels in the last column.

If you have a csv table without headings, you have the csvUnnamed format, and the labels can be found in the ‘label_column’ column specified in your spec file.

Note

The main loading concept is copied from the time series collection. It still needs to be checked whether the code can be moved to the superclass.

store(result_dir, s_format=['pickle', 'real'])

Stores this collection in the directory result_dir.

In contrast to dump this method stores the collection not in a single file but as a whole directory structure with meta information etc. The data sets are stored separately for each run, split, train/test combination.

The method expects the following parameters:

result_dir: The directory in which the collection will be stored

name: The prefix of the file names in which the individual data sets are stored. The actual file names are determined by appending suffixes that encode run, split, train/test information. Defaults to “features”.

format: A list with information about the format in which the actual data sets should be stored. The first entry specifies the file format. If it is “arff”, the second entry specifies the attribute format.

Examples: [“arff”, “real”], [“arff”, “{0,1}”]

To store the data in comma separated values, use [“csv”, “real”].

(optional, default: [“pickle”, “real”])
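To make the role of the attribute format concrete, the following sketch renders feature vectors as ARFF text, using the second list entry as the type of every feature attribute. The function to_arff is a hypothetical helper, not the pySPACE writer; it assumes a nominal class attribute in the last position and names that need no quoting.

```python
def to_arff(relation, feature_names, vectors, labels, attribute_format="real"):
    """Render feature vectors as ARFF text.

    Hypothetical helper, not the pySPACE writer. The class attribute is
    assumed to be nominal and to come last.
    """
    classes = sorted(set(labels))
    lines = ["@relation " + relation]
    for name in feature_names:
        # attribute_format is e.g. "real" or "{0,1}".
        lines.append("@attribute {} {}".format(name, attribute_format))
    lines.append("@attribute class {" + ",".join(classes) + "}")
    lines.append("@data")
    for vector, label in zip(vectors, labels):
        values = [str(value) for value in vector]
        lines.append(",".join(values + [label]))
    return "\n".join(lines) + "\n"


arff_text = to_arff("features", ["f1", "f2"],
                    [[1.0, 2.0], [3.0, 4.0]], ["A", "B"])
```

Passing attribute_format="{0,1}" instead would declare each feature as a nominal attribute with the two values 0 and 1.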
