performance_result

Module: resources.dataset_defs.performance_result

Tabular listing of data sets, parameters, and a large number of performance metrics

Store and load the performance results of an operation from a csv file, select subsets of these results, or create various kinds of plots.

Special Static Methods:

- merge_performance_results: Merge result*.csv files when classification fails or is aborted.
- repair_csv: Wrapper function for the whole csv repair process when classification fails or is aborted.

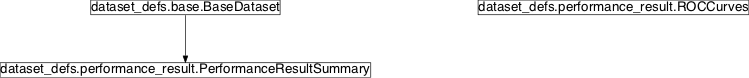

Inheritance diagram for pySPACE.resources.dataset_defs.performance_result:

Class Summary

| Class | Description |
|---|---|
| PerformanceResultSummary([data, dataset_md, ...]) | Classification performance results summary |
| ROCCurves(base_path) | Class for plotting ROC curves |

Classes

PerformanceResultSummary

class pySPACE.resources.dataset_defs.performance_result.PerformanceResultSummary(data=None, dataset_md=None, dataset_dir=None, csv_filename=None, **kwargs)

Bases: pySPACE.resources.dataset_defs.base.BaseDataset

Classification performance results summary

Column identifiers follow a few syntax rules that distinguish their roles:

- Parameters/Variables start and end with __. These identifiers define the processing differences of the entries. Together, their values form a unique key for each row.

- Normal metrics start with a capital letter followed by lowercase letters (AUC is an exception).

- Meta metrics, like training metrics, LOO metrics, or soft metrics, start with a lowercase category name, followed by a hyphen (-) and the detailed metric name.

- Meta information, like chosen optimal parameters, is marked with ~~ at the beginning and end of the information name, which separates it from metrics and variables.
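As an illustration of these naming rules, the following minimal sketch sorts a column name into one of the four categories. It is not the pySPACE implementation; the function name `classify_column` and the example column names (such as the `train-` prefix) are hypothetical.

```python
def classify_column(name):
    """Classify a result-table column name by the naming rules above (sketch)."""
    # Parameters/variables: __name__
    if len(name) > 4 and name.startswith("__") and name.endswith("__"):
        return "variable"
    # Meta information: ~~name~~
    if len(name) > 4 and name.startswith("~~") and name.endswith("~~"):
        return "meta information"
    # Meta metrics: lowercase category, a hyphen, then the metric name
    if name[:1].islower() and "-" in name:
        return "meta metric"
    # Normal metrics start with a capital letter (this also covers AUC)
    if name[:1].isupper():
        return "metric"
    return "unknown"


for column in ["__Classifier__", "Balanced_accuracy", "AUC",
               "train-Balanced_accuracy", "~~Chosen_parameter~~"]:
    print(column, "->", classify_column(column))
```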

This class can load a result table (namely the results.csv file) using the factory method from_csv(). Furthermore, the method project_onto() allows selecting a subset of the result collection where a parameter takes on a certain value. The class also contains various methods for plotting the loaded results. These functions are used by the analysis operation and by the interactive analysis GUI.

Result collections are mainly loaded for comp_analysis, analysis, and, as the best alternative, performance_results_analysis. They can be built, e.g., with the classification_performance_sink nodes, with MMLF, or with WekaClassificationOperation. The metrics produced by the classification_performance_sink nodes are calculated in the metric dataset module.

The class constructor expects the following arguments:

data: A dictionary that maps each attribute (e.g., accuracy) to the list of values taken by this attribute. An entry (row) consists of the i-th values of all these lists.

tmp_pathlist: List of files to be deleted after the collection has been stored successfully

When the collection is constructed via from_multiple_csv, all included csv files can be deleted after the collection is stored. For this, the parameter delete has to be active.

(optional, default: None)

delete: Switch for deleting the files in tmp_pathlist after the collection is stored.

(optional, default: False)
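The column-oriented layout of data can be pictured with a small sketch: each key maps to a list, and entry i is formed from the i-th element of every list. The column names and values below are made up for illustration, and `iter_entries` is a hypothetical helper, not part of the class.

```python
def iter_entries(data):
    """Yield one entry (row dict) per index i from a column-oriented dict."""
    lengths = {len(values) for values in data.values()}
    if len(lengths) > 1:
        raise ValueError("all columns must have the same length")
    n_rows = lengths.pop() if lengths else 0
    for i in range(n_rows):
        # An entry is the collection of all i-th values over all columns
        yield {key: values[i] for key, values in data.items()}


data = {
    "__Classifier__": ["SVM", "LDA"],
    "Balanced_accuracy": ["0.81", "0.77"],
}
for entry in iter_entries(data):
    print(entry)
```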

Author: Mario M. Krell (mario.krell@dfki.de)

Class Components Summary

| Method | Description |
|---|---|
| dict2tuple(dictionary) | Return dictionary values sorted by key names |
| from_csv(csv_file_path) | Load data from the csv file located under csv_file_path |
| from_multiple_csv(input_dir) | Combine all csv files in input_dir into one result collection |
| get_gui_metrics() | Returns the columns in data that correspond to metrics for visualization. |
| get_gui_variables() | Returns the column headings that correspond to 'variables' to be visualized in the GUI |
| get_indexed_data() | Take the variables and create a dictionary with variable entry tuples as keys |
| get_metrics() | Returns the columns in data that are real metrics |
| get_nominal_parameters(parameters) | Returns a generator over the nominal parameters in parameters |
| get_numeric_parameters(parameters) | Returns a generator over the numeric parameters in parameters |
| get_parameter_values(parameter) | Returns the values that parameter takes on in the data |
| get_performance_entry(search_dict) | Get the row in the data that corresponds to search_dict |
| get_variables() | Variables are marked with '__' |
| merge_performance_results(input_dir[, ...]) | Merge result*.csv files when classification fails or is aborted. |
| merge_traces(input_dir) | Merge and store the classification trace files in the directory tree |
| plot_histogram(axes, metric, ...[, average_runs]) | Plots a histogram of the values the given metric takes on in data |
| plot_nominal(axes, x_key, y_key) | Creates a boxplot of the y_key for the given nominal parameter x_key. |
| plot_nominal_vs_nominal(axes, nominal_key1, ...) | Plot comparison of several different values of two nominal parameters |
| plot_numeric(axes, x_key, y_key[, conditions]) | Creates a plot of the y_key for the given numeric parameter x_key. |
| plot_numeric_vs_nominal(axes, numeric_key, ...) | Plot for comparison of several different values of a nominal parameter with mean and standard error |
| plot_numeric_vs_numeric(axes, axis_keys, ...) | Contour plot of the value_key for the two numeric parameters axis_keys. |
| project_onto(proj_parameter, proj_values) | Project result collection onto a subset that fulfills all criteria |
| repair_csv(path[, num_splits, default_dict, ...]) | Wrapper function for the whole csv repair process when classification fails or is aborted. |
| store(result_dir[, name, s_format, main_metric]) | Stores this collection in the directory result_dir. |
| transfer_Key_Dataset_to_parameters(data_dict) | |
| transform() | Fix format problems like floats in metric columns and tuples instead of column lists |
| translate_weka_key_schemes(data_dict) | Data dict is initialized as 'defaultdict(list)', so the append function works on non-existing keys. |

static from_multiple_csv(input_dir)

Combines all csv files in input_dir, the only function parameter, into a single result collection.

Any file deletion is done in the store method, after the result has been stored successfully.

transform()

Fixes format problems, such as floats in metric columns and tuples instead of column lists.

static merge_traces(input_dir)

Merges and stores the classification trace files in the directory tree.

The collected results are stored in a common file in the input_dir.

static translate_weka_key_schemes(data_dict)

The data dict is initialized as defaultdict(list), so the append function also works on non-existing keys.
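The behavior this docstring relies on is standard Python: `collections.defaultdict(list)` creates an empty list the first time a missing key is accessed, so `append` needs no existence check. The keys and values below are illustrative only.

```python
from collections import defaultdict

data_dict = defaultdict(list)

# No "if key not in data_dict" check is needed: the first access to a
# missing key inserts an empty list, which append then extends.
data_dict["Key_Dataset"].append("dataset_a")
data_dict["Key_Dataset"].append("dataset_b")
data_dict["Percent_correct"].append("81.5")

print(dict(data_dict))
```

Note that a membership test such as `"other" in data_dict` does not create a key; only item access does.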

static merge_performance_results(input_dir, delete_files=False)

Merges result*.csv files when classification fails or is aborted.

Call the function with the path where the csv files are stored, e.g., merge_performance_results('/Users/seeland/collections/20100812_11_18_58').

Parameters

input_dir: String with the path where the csv files are stored.

delete_files: Controls whether the csv files are removed after merging has finished.

(optional, default: False)

Author: Mario Krell Created: 2011/09/21
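A rough standard-library sketch of what merging result*.csv files can look like is given below. It assumes every file has a header row and uses the union of all headers as columns, leaving missing cells empty; the function name `merge_result_csvs` and the output name results.csv are assumptions for this example, not the pySPACE implementation.

```python
import csv
import glob
import os


def merge_result_csvs(input_dir, delete_files=False, out_name="results.csv"):
    """Concatenate all result*.csv files in input_dir into one csv file (sketch)."""
    out_path = os.path.join(input_dir, out_name)
    # results.csv itself matches the pattern, so exclude the output file
    paths = [p for p in sorted(glob.glob(os.path.join(input_dir, "result*.csv")))
             if os.path.abspath(p) != os.path.abspath(out_path)]
    rows, fieldnames = [], []
    for path in paths:
        with open(path, newline="") as f:
            reader = csv.DictReader(f)
            for name in reader.fieldnames or []:
                if name not in fieldnames:  # union of all headers, in order
                    fieldnames.append(name)
            rows.extend(reader)
    with open(out_path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames, restval="")
        writer.writeheader()
        writer.writerows(rows)
    if delete_files:  # remove the partial files only after writing succeeded
        for path in paths:
            os.remove(path)
    return out_path
```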

static repair_csv(path, num_splits=None, default_dict=None, delete_files=True)

Wrapper function for the whole csv repair process when classification fails or is aborted.

This function runs merge_performance_results, reports and reconstructs missing conditions, and performs a final merge. As a result, two files are written to the specified path: results.csv and repaired_results.csv.

Parameters

path: String containing the path where the classification results are stored. This path is also used for storing the resulting csv files.

num_splits: Number of splits used for classification. If not specified, this information is read from the csv file produced by the merge_performance_results procedure.

(optional, default: None)

default_dict: A dictionary specifying default values for missing conditions. This dictionary can, e.g., be constructed using empty_dict(csv_dict) and subsequent modification, e.g., default_dict['Metric'].append(0). This parameter is used in reconstruct_failures.

(optional, default: None)

delete_files: Controls whether unnecessary files are deleted by merge_performance_results and check_op_libSVM.

(optional, default: True)

Author: Mario Krell, Sirko Straube Created: 2010/11/09
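The following sketch illustrates the idea behind default_dict: build a dictionary with the same keys as the csv dictionary but empty value lists, add default metric values, and use it to fill in a row for a missing condition. Both `empty_dict` and `add_missing_condition` here are hypothetical stand-ins written for this example; they are not the pySPACE functions, and the column names are made up.

```python
def empty_dict(csv_dict):
    """Stand-in: same keys as csv_dict, every value list empty."""
    return {key: [] for key in csv_dict}


def add_missing_condition(csv_dict, condition, default_dict):
    """Append one row for a missing condition (hypothetical helper).

    Keys given in `condition` take that value, keys with a default in
    `default_dict` take the first default, all other keys stay empty.
    """
    for key, column in csv_dict.items():
        if key in condition:
            column.append(condition[key])
        elif default_dict.get(key):
            column.append(default_dict[key][0])
        else:
            column.append("")


csv_dict = {"__Classifier__": ["SVM"], "__Run__": ["0"], "Metric": ["0.9"]}
default_dict = empty_dict(csv_dict)
default_dict["Metric"].append(0)  # modification as in the parameter description
add_missing_condition(csv_dict, {"__Classifier__": "SVM", "__Run__": "1"},
                      default_dict)
print(csv_dict)
```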

store(result_dir, name='results', s_format='csv', main_metric='Balanced_accuracy')

Stores this collection in the directory result_dir.

In contrast to dump, this method does not store the collection in a single file but as a whole directory structure with meta information, etc.

Parameters

result_dir: The directory in which the collection will be stored

name: The name under which the result file is stored.

(optional, default: ‘results’)

s_format: The format in which the actual data sets should be stored.

(optional, default: ‘csv’)

main_metric: Name of the metric used for the shortened stored file. If no metric is given, no shortened version is stored.

(optional, default: ‘Balanced_accuracy’)

project_onto(proj_parameter, proj_values)

Projects the result collection onto a subset that fulfills all criteria.

Projects the result collection onto the rows where the parameter proj_parameter takes on the value(s) given in proj_values.
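A minimal sketch of such a projection on a column-oriented dictionary follows. It assumes proj_values may be a single value or a collection of accepted values; this standalone function and its example data are illustrative and do not reproduce the method's exact behavior.

```python
def project_onto(data, proj_parameter, proj_values):
    """Return the sub-table of rows whose proj_parameter value is accepted."""
    if not isinstance(proj_values, (list, tuple, set)):
        proj_values = [proj_values]
    keep = [i for i, value in enumerate(data[proj_parameter])
            if value in proj_values]
    # Apply the same row selection to every column
    return {key: [values[i] for i in keep] for key, values in data.items()}


data = {"__Classifier__": ["SVM", "LDA", "SVM"],
        "Balanced_accuracy": ["0.8", "0.7", "0.9"]}
print(project_onto(data, "__Classifier__", "SVM"))
```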

get_gui_metrics()

Returns the columns in data that correspond to metrics for visualization.

This excludes 'Key_Dataset' and the GUI variables of the table.

get_gui_variables()

Returns the column headings that correspond to 'variables' to be visualized in the GUI.

get_variables()

Variables are marked with '__'.

Everything else is metrics, meta metrics, or processing information.

get_nominal_parameters(parameters)

Returns a generator over the nominal parameters in parameters.

Note

This method shares nearly the same code with get_numeric_parameters. Changes made here should also be made there.

get_numeric_parameters(parameters)

Returns a generator over the numeric parameters in parameters.

Note

This method shares nearly the same code with get_nominal_parameters. Changes made here should also be made there.
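One way to avoid the duplicated code mentioned in these notes is to share a single numeric test. The sketch below assumes that a parameter counts as numeric when every one of its values converts to float; that criterion and these standalone functions (which take data as an extra argument) are assumptions, not the documented behavior.

```python
def is_numeric(values):
    """True if every value can be converted to float."""
    try:
        for value in values:
            float(value)
    except (TypeError, ValueError):
        return False
    return True


def get_numeric_parameters(data, parameters):
    """Generator over the parameters whose values are all numeric."""
    return (p for p in parameters if is_numeric(data[p]))


def get_nominal_parameters(data, parameters):
    """Generator over the remaining (nominal) parameters."""
    return (p for p in parameters if not is_numeric(data[p]))


data = {"__C__": ["0.1", "1", "10"], "__Classifier__": ["SVM", "LDA", "SVM"]}
print(list(get_numeric_parameters(data, data)))
print(list(get_nominal_parameters(data, data)))
```

Because both generators use the same predicate, a change to the numeric criterion only has to be made in one place.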

get_indexed_data()

Takes the variables and creates a dictionary with variable entry tuples as keys.
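The sketch below shows the idea, using dict2tuple as described in the component summary (values ordered by sorted key names) and treating columns named __...__ as variables. What is stored under each key (here the complete row) is an assumption made for this example, and the functions are standalone illustrations rather than the class methods.

```python
def dict2tuple(dictionary):
    """Return the dictionary values ordered by sorted key names."""
    return tuple(dictionary[key] for key in sorted(dictionary))


def get_indexed_data(data):
    """Map each tuple of variable values to its complete row (sketch)."""
    variables = [k for k in data if k.startswith("__") and k.endswith("__")]
    n_rows = len(next(iter(data.values()), []))
    indexed = {}
    for i in range(n_rows):
        # The variable values of a row form its unique key
        key = dict2tuple({var: data[var][i] for var in variables})
        indexed[key] = {name: values[i] for name, values in data.items()}
    return indexed


data = {"__Classifier__": ["SVM", "LDA"], "__C__": ["1", "1"],
        "Balanced_accuracy": ["0.8", "0.7"]}
print(get_indexed_data(data))
```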

get_performance_entry(search_dict)

Gets the row in the data that corresponds to search_dict.
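A standalone sketch of the lookup follows, assuming search_dict maps column names to the values the row must have and that the first matching row is returned (None if nothing matches). Both assumptions go beyond the one-line docstring, and the example data is made up.

```python
def get_performance_entry(data, search_dict):
    """Return the first row matching every key/value pair in search_dict."""
    n_rows = len(next(iter(data.values()), []))
    for i in range(n_rows):
        if all(data[key][i] == value for key, value in search_dict.items()):
            return {name: values[i] for name, values in data.items()}
    return None  # no matching row


data = {"__Classifier__": ["SVM", "LDA"], "Balanced_accuracy": ["0.8", "0.7"]}
print(get_performance_entry(data, {"__Classifier__": "LDA"}))
```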

plot_numeric(axes, x_key, y_key, conditions=[])

Creates a plot of the y_key for the given numeric parameter x_key.

This function creates a plot that visualizes the effect of varying one variable on a second one (e.g., the effect of varying the number of features on the accuracy).

Expected arguments

axes: The axes into which the plot is written

x_key: The key of the dictionary whose values are used for the x-axis (the independent variable)

y_key: The key of the dictionary whose values are used for the y-axis, i.e., the dependent variable

conditions: A list of functions that must all be fulfilled for an entry to be used in the plot. Each function takes two arguments, the data dictionary containing all entries and the index of the entry to check, and must return a boolean value.
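To illustrate the conditions argument, the sketch below collects the (x, y) pairs that a plot like this would draw, keeping only entries for which every condition returns True. The helper `select_points`, the condition `only_svm`, and the column names are hypothetical; drawing into axes is omitted.

```python
def select_points(data, x_key, y_key, conditions=()):
    """Return sorted (x, y) pairs of all entries that satisfy every condition."""
    points = []
    for i in range(len(data[x_key])):
        # Each condition receives the whole data dict and the entry index
        if all(condition(data, i) for condition in conditions):
            points.append((float(data[x_key][i]), float(data[y_key][i])))
    return sorted(points)


def only_svm(data, i):
    """Example condition: keep only entries computed with an SVM."""
    return data["__Classifier__"][i] == "SVM"


data = {"__Features__": ["10", "5", "20"],
        "Balanced_accuracy": ["0.7", "0.6", "0.8"],
        "__Classifier__": ["SVM", "SVM", "LDA"]}
print(select_points(data, "__Features__", "Balanced_accuracy", [only_svm]))
```

The sketch uses an empty tuple as the default for conditions, which sidesteps the shared-mutable-default pitfall that a list default can cause if it is ever modified.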

plot_numeric_vs_numeric(axes, axis_keys, value_key, scatter=True)

Contour plot of the value_key for the two numeric parameters axis_keys.

This function creates a contour plot that visualizes the effect of varying two variables on a third one (e.g., the effect of varying the lower and upper cutoff frequency of a bandpass filter on the accuracy).

Parameters

axes: The axes into which the plot is written

axis_keys: The two keys of the dictionary that are assumed to have an effect on a third variable (the dependent variable)

value_key: The dependent variable whose values determine the color of the contour plot

scatter: Plot nearly invisible dots behind the real data points.

(optional, default: True)

plot_numeric_vs_nominal(axes, numeric_key, nominal_key, value_key, dependent_BA_plot=False, relative_plot=False, minimal=False)

Plot for comparison of several different values of a nominal parameter with mean and standard error

This function creates a plot that visualizes the effect of varying one numeric parameter on the performance for several different values of a nominal parameter.

Parameters

axes: The axes into which the plot is written

numeric_key: The numeric parameter whose effect (together with the nominal parameter) onto the dependent variable should be investigated.

nominal_key: The nominal parameter whose effect (together with the numeric parameter) onto the dependent variable should be investigated.

value_key: The dependent variable whose values determine the color of the contour plot

dependent_BA_plot: If the value_key contains time or iterations and this variable is True, the value is replaced by Balanced_Accuracy and the nominal_key by the value_key. The points in the graph are constructed by averaging over the old nominal parameter.

(optional, default: False)

relative_plot: The first nominal_key value (in alphabetical order) is chosen, and the other parameter values are averaged relative to it to show by which factor they change the metric. This requires a clean table that contains only relevant, correctly named variables and in which each parameter is compared with the others. Relative plots and dependent_BA plots can be combined.

(optional, default: False)

minimal: Do not plot labels and legends.

(optional, default: False)

plot_nominal(axes, x_key, y_key)

Creates a boxplot of the y_key for the given nominal parameter x_key.

This function creates a plot that visualizes the effect of varying one nominal variable on a second one (e.g., the effect of varying the classifier on the accuracy).

Expected arguments

axes: The axes into which the plot is written

x_key: The key of the dictionary whose values are used for the x-axis (the independent variable)

y_key: The key of the dictionary whose values are used for the y-axis, i.e., the dependent variable

plot_nominal_vs_nominal(axes, nominal_key1, nominal_key2, value_key)

Plot comparison of several different values of two nominal parameters

This function creates a plot that visualizes the effect of varying one nominal parameter on the performance for several different values of another nominal parameter.

Expected arguments

axes: The axes into which the plot is written

nominal_key1: The name of the first nominal parameter whose effect is investigated. This parameter determines the x-axis.

nominal_key2: The second nominal parameter. Each of its values is represented by a different color.

value_key: The name of the dependent variable whose values determine the y-values in the plot.

plot_histogram(axes, metric, numeric_parameters, nominal_parameters, average_runs=True)

Plots a histogram of the values the given metric takes on in data

Plots a histogram for metric in which each parameter combination from numeric_parameters and nominal_parameters corresponds to one value (if average_runs == True) or each run corresponds to one value (if average_runs == False). The plot is written into axes.
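The role of average_runs can be sketched as follows: with averaging, the metric values of all runs that share one parameter combination are reduced to their mean; without it, every run contributes its own value. The function name `histogram_values` and the example data are hypothetical, and only the histogram input is computed here; a list like this could then be passed to matplotlib's `axes.hist`.

```python
from collections import defaultdict
from statistics import mean


def histogram_values(data, metric, numeric_parameters, nominal_parameters,
                     average_runs=True):
    """Values that would enter the histogram of `metric` (sketch)."""
    values = [float(v) for v in data[metric]]
    if not average_runs:
        return values  # one value per run
    parameters = list(numeric_parameters) + list(nominal_parameters)
    groups = defaultdict(list)
    for i, value in enumerate(values):
        # Runs with identical parameter values form one combination
        combination = tuple(data[p][i] for p in parameters)
        groups[combination].append(value)
    return [mean(group) for group in groups.values()]


data = {"__C__": ["1", "1", "10", "10"],
        "Balanced_accuracy": ["0.5", "1.0", "0.25", "0.75"]}
print(histogram_values(data, "Balanced_accuracy", ["__C__"], []))
```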

ROCCurves

class pySPACE.resources.dataset_defs.performance_result.ROCCurves(base_path)

Bases: object

Class for plotting ROC curves

Class Components Summary

| Method | Description |
|---|---|
| _load_all_curves(dir) | Load all ROC curves located in the persistency dirs below dir |
| _project_onto_subset(roc_curves, constraints) | Retain only roc_curves that fulfill the given constraints. |
| is_empty() | Return whether there are no loaded ROC curves |
| plot(axis, selected_variable, ...[, fpcost, ...]) | |
| plot_all(axis, projection_parameter[, ...]) | Plot all loaded ROC curves after projecting onto a subset. |

__weakref__

List of weak references to the object (if defined)

plot(axis, selected_variable, projection_parameter, fpcost=1.0, fncost=1.0, collection=None)