time_series

Module: resources.dataset_defs.time_series

Load and store data of the type pySPACE.resources.data_types.time_series

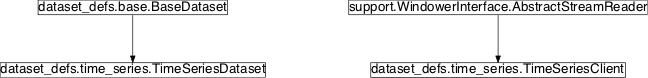

Inheritance diagram for pySPACE.resources.dataset_defs.time_series:

Class Summary

TimeSeriesDataset([dataset_md, sort_string]) | Loading and storing a time series dataset

TimeSeriesClient(ts_stream, \*\*kwargs) | TimeSeries stream client for TimeSeries

Classes

TimeSeriesDataset

class pySPACE.resources.dataset_defs.time_series.TimeSeriesDataset(dataset_md=None, sort_string=None, **kwargs)

Bases: pySPACE.resources.dataset_defs.base.BaseDataset

Loading and storing a time series dataset

This class encapsulates the most relevant code for dealing with time series datasets, most importantly for loading them from and storing them to the file system.

These datasets consist of time_series objects. They can be loaded with a time_series_source and saved with a time_series_sink node in a NodeChainOperation.

The standard storage_format is ‘pickle’, but it is also possible to load Matlab format (‘mat’) or BrainComputerInterface-competition data. For that, storage_format has to be set in the format bci_comp_[competition number]_[dataset number] in the metadata.yaml file. For example, bci_comp_2_4 means loading of time series from BCI Competition II (2003), dataset IV. Currently, the following datasets can be loaded:

- BCI Competition II, dataset IV: self-paced key typing (left vs. right)

- BCI Competition III, dataset II: P300 speller paradigm, training data

See http://www.bbci.de/competition/ for further information.
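The naming scheme bci_comp_[competition number]_[dataset number] can be illustrated with a small sketch. The helper `parse_bci_storage_format` is hypothetical and not part of pySPACE; it only shows how such a string splits into its two numbers.

```python
import re

# Hypothetical helper, not part of pySPACE: splits a storage_format string
# of the form bci_comp_[competition number]_[dataset number].
_BCI_PATTERN = re.compile(r"^bci_comp_(\d+)_(\d+)$")


def parse_bci_storage_format(storage_format):
    """Return (competition_number, dataset_number), or None if the string
    does not follow the bci_comp_* naming scheme."""
    match = _BCI_PATTERN.match(storage_format)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


print(parse_bci_storage_format("bci_comp_2_4"))  # (2, 4)
print(parse_bci_storage_format("pickle"))        # None
```

Here bci_comp_2_4 yields competition 2 and dataset 4, matching the example in the text, while other storage formats such as ‘pickle’ do not match the scheme.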

Some additional formats are currently supported for saving the data but not yet for loading it. This limitation can be worked around by processing the data with a node chain operation that transforms the data into feature vectors and then using the respective storing and loading functionality, e.g., with csv and arff files. There is also a node for transforming feature vectors back to TimeSeries objects.

Parameters

dataset_md: A dictionary with all the meta data.

(optional, default: None)

sort_string: A lambda function string that is evaluated before the data is stored.

(optional, default: None)
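As a rough illustration of what a sort_string might look like, the sketch below assumes that the string is turned into a function with `eval` and then used as a sort key. This mechanism, the sample layout of (data, label) pairs, and the label names are assumptions made for the example; the description above only states that the string is evaluated before the data is stored.

```python
# Stand-in samples: (data, label) pairs. In pySPACE the first element would
# be a TimeSeries object; plain lists are used here to stay self-contained.
samples = [([3, 4], "Target"), ([1, 2], "Standard"), ([5, 6], "Standard")]

# Hypothetical sort_string: order the samples by their label.
sort_string = "lambda sample: sample[1]"

# Assumption: the string is evaluated to a function and used as a sort key.
sort_key = eval(sort_string)  # only evaluate strings from a trusted source
sorted_samples = sorted(samples, key=sort_key)

print([label for _, label in sorted_samples])
```

Because the string is evaluated as Python code, it should only come from a trusted specification file.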

- Known issues

- The BCI Competition III dataset II should actually be loaded as a streaming dataset to enable different possibilities for windowing. Segment ends (i.e., where a new letter starts) can be coded as markers.

Class Components Summary

get_data(run_nr, split_nr, train_test) | Return the train or test data for the given split in the given run.

set_window_defs(window_definition[, ...]) | Code copied from StreamDataset for rewindowing data

store(result_dir[, s_format]) | Stores this collection in the directory result_dir.

get_data(run_nr, split_nr, train_test)

Return the train or test data for the given split in the given run.

Parameters

run_nr: The number of the run whose data should be loaded.

split_nr: The number of the split whose data should be loaded.

train_test: “train” if the training data should be loaded, “test” if the test data should be loaded.
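The three parameters together identify one data set. The following stand-in shows the idea with a plain dictionary keyed by (run_nr, split_nr, train_test); the key layout and the sample values are assumptions for illustration and do not reproduce the pySPACE implementation.

```python
# Stand-in for a dataset: a dict keyed by (run_nr, split_nr, train_test).
# The key layout and the sample values are assumptions made for illustration.
data = {
    (0, 0, "train"): ["sample_a", "sample_b"],
    (0, 0, "test"): ["sample_c"],
}


def get_data(run_nr, split_nr, train_test):
    """Return the samples stored for one run/split/train-test combination."""
    if train_test not in ("train", "test"):
        raise ValueError('train_test must be "train" or "test"')
    return data[(run_nr, split_nr, train_test)]


print(get_data(0, 0, "train"))
```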

store(result_dir, s_format='pickle')

Stores this collection in the directory result_dir.

In contrast to dump, this method does not store the collection in a single file but as a whole directory structure including meta information. The data sets are stored separately for each run, split, train/test combination.

Parameters

result_dir: The directory in which the collection will be stored.

name: The prefix of the file names in which the individual data sets are stored. The actual file names are determined by appending suffixes that encode run, split, train/test information.

(optional, default: “time_series”)

s_format: The format in which the actual data sets should be stored.

Possible formats are ‘pickle’, ‘text’, ‘csv’ and ‘mat’ (matlab) format. If s_format is a list, the second element further specifies additional options for storing.

- pickle: Standard Python format.

- text: All time series objects are concatenated into a single large table containing only integer values.

- csv: Values are comma separated by default; alternatively, a Python format string can be specified.

- mat: Scipy’s savemat function is used for storing. The data is stored as a 3-dimensional array. Meta data, such as the sampling frequency and channel names, is also saved. As an additional parameter, the orientation of the data arrays can be given as ‘channelXtime’ or ‘timeXchannel’.

Note

For the text and MATLAB formats, markers can be added by using a Marker_To_Mux node before storing.

(optional, default: “pickle”)
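To make the csv option more concrete, here is a minimal sketch of writing one time series as comma separated values, optionally applying a Python format string to every value. The function `to_csv`, the channel names, and the way the format string is applied are assumptions for illustration; the actual pySPACE output layout may differ.

```python
import csv
import io

# Stand-in for one time series: rows are time points, columns are channels.
# Channel names and values are made up for illustration.
channel_names = ["C3", "C4"]
samples = [[1.0, 2.5], [3.25, 4.0]]


def to_csv(rows, header, fmt=None):
    """Write rows as comma separated text; fmt is an optional Python
    format string (e.g. "%.2f") applied to every value."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(header)
    for row in rows:
        writer.writerow([fmt % value if fmt else value for value in row])
    return buffer.getvalue()


print(to_csv(samples, channel_names, fmt="%.2f"))
```

With `fmt="%.2f"` every value is written with two decimal places; without a format string the values are written in their default string form.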

TimeSeriesClient

class pySPACE.resources.dataset_defs.time_series.TimeSeriesClient(ts_stream, **kwargs)

Bases: pySPACE.missions.support.WindowerInterface.AbstractStreamReader

TimeSeries stream client for TimeSeries

Class Components Summary

__abstractmethods__

_abc_cache

_abc_negative_cache

_abc_negative_cache_version

_abc_registry

_initialize(item)

_readmsg([msg_type, verbose]) | Read time series object from given iterator

channelNames

connect() | connect and initialize client

dSamplingInterval

markerNames

markerids

read([nblocks, verbose]) | Invoke registered callbacks for each incoming data block

regcallback(func) | Register callback function

set_window_defs(window_definitions) | Set all markers at which the windows are cut

stdblocksize

__abstractmethods__ = frozenset([])

_abc_cache = <_weakrefset.WeakSet object>

_abc_negative_cache = <_weakrefset.WeakSet object>

_abc_negative_cache_version = 33

_abc_registry = <_weakrefset.WeakSet object>

dSamplingInterval

stdblocksize

markerids

channelNames

markerNames