parameter_optimization¶

Module: missions.nodes.meta.parameter_optimization¶

Determine the optimal parameterization of a subflow

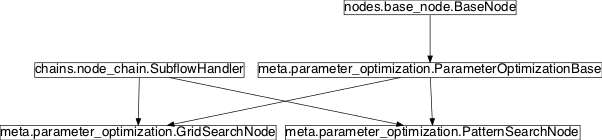

Inheritance diagram for pySPACE.missions.nodes.meta.parameter_optimization:

Class Summary¶

ParameterOptimizationBase(flow_template[, ...]) |

Base class for parameter optimization nodes |

GridSearchNode([ranges, grid]) |

Grid search for optimizing the parameterization of a subflow |

PatternSearchNode([start, directions, ...]) |

Extension of the standard Pattern Search algorithm |

Classes¶

ParameterOptimizationBase¶

-

class

pySPACE.missions.nodes.meta.parameter_optimization.ParameterOptimizationBase(flow_template, variables=[], metric='Balanced_accuracy', std_weight=0, inverse_metric=False, runs=1, nominal_ranges=None, debug=False, validation_parameter_settings={}, final_training_parameter_settings={}, **kwargs)[source]¶ Bases:

pySPACE.missions.nodes.base_node.BaseNodeBase class for parameter optimization nodes

The overall goal is to determine the parameterization of a subflow that maximizes a given metric.

Nodes derived from this class can determine the optimal parameterization (variables) of a subpart of a flow (nodes) fully autonomously. For instance, for different numbers of features retained during feature selection, different complexities/regularization coefficients might be optimal for a classifier. In order to determine which feature-number is optimal, one must choose classifier’ complexity separately for each feature number.

First of all the training data is split into training and validation data e.g. by cross validation (validation_set). Then for a chosen set of parameters the data is processed as described in the specification (nodes). Then the classifier is evaluated (evaluation) using another node, some combination of metrics or something else as for example a derivative in future implementations. So the nodes specification should always include a classifier which is evaluated.

This procedure is maybe repeated until a good set of parameters is found. The algorithm, which defines the way of choosing parameters should determine the node name (e.g. PatternSearchNode) and parameters specifically for the optimization procedure can be passed via the optimization spec. Also, general functions, especially the parts belonging to the evaluation of a parameter are provided in this base class (e.g., function mapping parameter dictionaries to keys and a function to create a grid from given parameters)

When a good parameter is found, the nodes are trained with this parameter on the whole data set.

Note

In future, alternatives can be added, e.g. to combine the different flows of the cross validation with a simple ensemble classifier.

Note

If you want to optimize parameters for each sub-split, this should not be done in this node.

Parameters It is important to mention, that the definition of parameters of this node is structured into main parameters and sub parameters to describe the different aspects of parameter optimization. So take care of indentations.

optimization: As mentioned above this parameter dictionary is used by the specific subclasses, i.e., by the specific optimization algorithms. Hence, see subclasses for documentation of possible parameters.

parallelization: This parameter dictionary is used for parallelization of subflow execution. Possible parameters so far are processing_modality, pool_size and batch_size. See

SubflowHandlerfor more information.validation_set: splits: The number of splits used in an internal cross-validation loop. Note that more splits lead to better estimates of the parametrization’s performance but do also increase computation time considerably.

(recommended, default: 5)

split_node: If no standard CV-splitter with “cv_splits” splits is used, an alternative node is specified by this node in YAML node syntax.

(optional, default: CV_Splitter)

runs: Number of internal runs used for evaluation. Nodes as the CV_Splitter behave different with every run. So we repeat the calculation runs times and have a different randomizer each time. The random seed used in each repetition is:

10 * external_run_number + internal_run_number

The resulting performance measure is calculated with the performances of different (internal) runs and splits. (average - w * standard deviation)

(optional, default: 1)

randomize: Changing cv-splitter with every parameter evaluation step.

Note

Not yet implemented

evaluation: Specification of the sink node and the corresponding evaluation function

performance_sink_node: Specify a different sink node in YAML node syntax. Otherwise the default ‘Classification_Performance_Sink’ will be used with the following parameter ir_class.

(optional, default: Classification_Performance_Sink)

ir_class: The class name (as string) for which IR statistics are computed.

(recommended, default: ‘Target’)

metric: This is the metric that should be maximized. Each metric which is computed by the performance_sink_node can be used, for instance “Balanced_accuracy”, “AUC”, “F_measure” or even soft metrics or loss metrics.

(recommended, default: ‘Balanced_accuracy’)

inverse_metric: If True, metric values are multiplied by -1. In this way, loss metrics can be used for parameter optimization.

(optional, boolean, default: False)

std_weight: Cross validation gives several values for the estimated performance. Therefore we use the difference expected value minus std_weight times standard deviation.

(optional, default: 0)

variables: List of the parameters, to be optimized and replaced in the node_spec.

nodes: The original specification of the nodes that should be optimized. The value of the “nodes” parameter must be a standard NodeChain definition in YAML syntax (properly indented).

nominal_ranges: Similar to the ranges in the grid search, a grid can be specified mainly for nominal parameters. All the other parameters are then optimized dependent on these parameters. Afterwards the resulting performance value is compared to choose the best nominal parameter. When storing the results each nominal parameter is stored

(optional, default: None)

debug: Switch on eventually existing debug messages

(optional, default: False)

validation_parameter_settings: Dictionary, of parameter mappings to be replaced in the nodes in the validation phase of the parameter optimization.

This works together with the final_training_parameter_settings.

(optional, default: dict())

final_training_parameter_settings: Dictionary, of parameter mappings to be replaced in the nodes after the validation phase of the parameter optimization in the final training phase.

This works together with the validation_parameter_settings.

A very important use case of these parameters is to switch of retrain mode in validation phase but nevertheless have it active in the final subflow or node.

(optional, default: dict())

Author: Mario Krell (mario.krell@dfki.de) Created: 2011/08/03 LastChange: 2012/09/03 Anett Seeland - new structure for parallelization Class Components Summary

_execute(data)Execute the flow on the given data vector data _get_flow()Method introduced for consistency with flow_node _inc_train(data[, class_label])Iterate through the nodes to train them _stop_training()Do the optimization step and define final parameter choice _train(data, label)Train the flow on the given data vector data check_parameters(param_spec)Check input parameters of existence and appropriateness get_best_dict_entry(performance_dict)Find the highest performance value in the dictionary get_best_parametrization()Apply optimization algorithm get_output_type(input_type[, as_string])Returns the output type of the entire flow get_previous_transformations([sample])Recursively construct a list of (linear) transformations get_sensor_ranking()Get the sensor ranking from the optimized trained flow is_retrainable()Retraining if one node in subflow is retrainable is_supervised()Return whether this node requires supervised training is_trainable()Return whether this node is trainable p2key(parameters)Map parameter dictionary to hashable tuple (key for dictionary) prepare_optimization()Initialize optimization procedure present_label(label)Forward the label to the subflow re_init()Reset optimization params search_grid(parameter_ranges)Combine each parameter in parameter ranges to a grid via cross product store_state(result_dir[, index])Store this node in the given directory result_dir -

__init__(flow_template, variables=[], metric='Balanced_accuracy', std_weight=0, inverse_metric=False, runs=1, nominal_ranges=None, debug=False, validation_parameter_settings={}, final_training_parameter_settings={}, **kwargs)[source]¶

-

static

check_parameters(param_spec)[source]¶ Check input parameters of existence and appropriateness

-

_stop_training()[source]¶ Do the optimization step and define final parameter choice

This is the main method of this node!

-

_execute(data)[source]¶ Execute the flow on the given data vector data

This method is used in offline mode and for delivering the training data for the next node. In the other case, request_data_for_testing is used.

-

_get_flow()[source]¶ Method introduced for consistency with flow_node

This node itself is no real flow_node, since the final flow is unknown during initialization, but specified during the optimization process.

-

_inc_train(data, class_label=None)[source]¶ Iterate through the nodes to train them

The optimal parameter remains fixed and then the nodes in the optimal flow get the incremental training.

Here it is important to know, that first the node is changed and then the changed data is forwarded to the next node. This is different to the normal offline retraining scheme.

-

present_label(label)[source]¶ Forward the label to the subflow

buffering must be set to True only for the main node for incremental learning in application (live environment). The inner nodes must not have set this parameter.

-

get_previous_transformations(sample=None)[source]¶ Recursively construct a list of (linear) transformations

Overwrites BaseNode function, since meta node needs to get transformations from subflow.

-

re_init()[source]¶ Reset optimization params

Subclasses can overwrite this method if necessary, e.g. in case some parameters have to be reinitialized if several optimizations are done

-

prepare_optimization()[source]¶ Initialize optimization procedure

Subclasses can overwrite this method if necessary.

-

get_best_parametrization()[source]¶ Apply optimization algorithm

This method has to be implemented in the subclass.

-

GridSearchNode¶

-

class

pySPACE.missions.nodes.meta.parameter_optimization.GridSearchNode(ranges=None, grid=None, *args, **kwargs)[source]¶ Bases:

pySPACE.missions.nodes.meta.parameter_optimization.ParameterOptimizationBase,pySPACE.environments.chains.node_chain.SubflowHandlerGrid search for optimizing the parameterization of a subflow

For each parameter a list of parameters is specified (ranges). The crossproduct of all values in ranges is computed and a subflow is evaluated for each of this parameterizations using cross-validation on the training data and finally the best point in the search grid is chosen as optimal point.

Parameters This algorithms does not need the variables parameter, since it is also included in the ranges parameter.

ranges: A dictionary mapping parameters to the values they should be tested for. If more than one parameter is given, the crossproduct of all parameter values is computed (i.e. each combination). For each resulting parameter combination, the flow specified in the YAML syntax is evaluated. The parameter names should be used somewhere in this YAML definition and should be unique since the instantiation is based on pure textual replacement. It is common to enforce this by starting and ending the parameter names by “~~”. In the example below, the two parameters are called “~~OUTLIERS~~” and “~~COMPLEXITY~~”, each having 3 values. This results in 9 parameter combinations to be tested.

grid: From the ranges parameter a grid is generated. As a replacement, the grid can be specified directly using this parameter. Therefore, a list of dictionaries is used.

For example a ranges parametrization like ‘{~~OUTLIERS~~ : [0, 5, 10], ~~COMPLEXITY~~ : [0.01, 0.1, 1.0]}’ could be transferred to:

- ~~OUTLIERS~~ : 0 ~~COMPLEXITY~~ : 0.01 - ~~OUTLIERS~~ : 0 ~~COMPLEXITY~~ : 0.1 - ~~OUTLIERS~~ : 0 ~~COMPLEXITY~~ : 1 - ~~OUTLIERS~~ : 5 ~~COMPLEXITY~~ : 0.01 - ~~OUTLIERS~~ : 5 ~~COMPLEXITY~~ : 0.1 - ~~OUTLIERS~~ : 5 ~~COMPLEXITY~~ : 1 - ~~OUTLIERS~~ : 10 ~~COMPLEXITY~~ : 0.01 - ~~OUTLIERS~~ : 10 ~~COMPLEXITY~~ : 0.1 - ~~OUTLIERS~~ : 10 ~~COMPLEXITY~~ : 1

Exemplary Call

- node : Grid_Search parameters : optimization: ranges : {~~OUTLIERS~~ : [0, 5, 10], ~~COMPLEXITY~~ : [0.01, 0.1, 1.0]} parallelization: processing_modality : 'backend' pool_size : 2 validation_set : split_node : node : CV_Splitter parameters : splits : 10 stratified : True time_dependent : True evaluation: metric : "Balanced_accuracy" std_weight: 1 performance_sink_node : node : Sliding_Window_Performance_Sink parameters : ir_class : "Movement" classes_names : ['NoMovement','Movement'] uncertain_area : 'eval([(-600,-350)])' calc_soft_metrics : True save_score_plot : True variables: [~~OUTLIERS~~, ~~COMPLEXITY~~] nodes : - node : Feature_Normalization parameters : outlier_percentage : ~~OUTLIERS~~ - node: LibSVM_Classifier parameters : complexity : ~~COMPLEXITY~~ class_labels : ['NoMovement', 'Movement'] weight : [1.0, 2.0] kernel_type : 'LINEAR'

POSSIBLE NODE NAMES: - GridSearchNode

- Grid_Search

- GridSearch

POSSIBLE INPUT TYPES: - PredictionVector

- FeatureVector

- TimeSeries

Class Components Summary

get_best_parametrization()Evaluate each flow-parameterization by running a cross validation input_typesnode_from_yaml(node_spec)Create the node based on the node_spec -

get_best_parametrization()[source]¶ Evaluate each flow-parameterization by running a cross validation on the training data for grid search

-

input_types= ['PredictionVector', 'FeatureVector', 'TimeSeries']¶

PatternSearchNode¶

-

class

pySPACE.missions.nodes.meta.parameter_optimization.PatternSearchNode(start=[], directions=[], start_step_size=1.0, stop_step_size=1e-10, scaling_factor=0.5, up_scaling_factor=1, max_iter=inf, max_bound=[], min_bound=[], red_pars=[], **kwargs)[source]¶ Bases:

pySPACE.missions.nodes.meta.parameter_optimization.ParameterOptimizationBase,pySPACE.environments.chains.node_chain.SubflowHandlerExtension of the standard Pattern Search algorithm

For main principle see: Numerical Optimization, Jorge Nocedal & Stephen J. Wright

Special Components

- No double calculation of already visited points

- Step size increased, when better point is found, to speed up search

- Possible limit on iteration steps to be comparable to grid search

- cross validation cycle inside

Parameters

The following parameters have to be specified in the optimization spec. For the Algorithm the thereof variables parameter is important since it gives the order of parameters to simplify the specification of vectors, corresponding to point, directions or bounds. They can be specified as dictionaries, lists or tuple and they are transformed internally to array with the method get_vector. The transformation back to keys for filling them in in the node chains is done by v2d.

start: Starting point of the algorithm. For SVM optimization, the complexity has to be sufficiently small.

(recommended, default: ones(dimension))

directions: List of directions, being evaluated around current best point.

(optional, default: unit directions)

start_step_size: First value to scale the direction vectors

(optional, default: 1.0)

stop_step_size: Minimal value to scale the direction vectors

If the step size gets lower, the algorithm stops.

(optional, default: 1e-10)

scaling_factor: When evaluations does not deliver a better point, the current scaling of the directions is reduced by the scaling factor. Otherwise it is increased by the up_scaling_factor.

(optional, default: 0.5)

up_scaling_factor: If the evaluation gives a better point, the step size is increased by this factor. In the default, there is no up scaling.

(optional, default: 1)

max_iter: If the total number of evaluation of the directions exceeds this value, the algorithm also stops.

(optional, default: infinity)

max_bound: Points exceeding this bounds are not evaluated.

(optional, default: inf*array(ones(dimension)))

min_bound: Undershooting points are not evaluated.

(optional, default: -inf*array(ones(dimension)))

Exemplary Call

- node : Pattern_Search parameters : parallelization : processing_modality : 'local' pool_size : 4 optimization: start_step_size : 0.002 start : [0.005,0.01] directions : [[-1,-1],[1,1],[1,-1],[-1,1]] stop_step_size : 0.00001 scaling_factor : 0.25 min_bound : [0,0] max_bound : [10,10] max_iter : 100 validation_set : split_node : node : CV_Splitter parameters : splits : 5 runs : 2 evaluation: metric : "Balanced_accuracy" std_weight: 1 ir_class : "Target" variables: [~~W1~~, ~~W2~~] nodes : - node: LibSVM_Classifier parameters : complexity : 1 class_labels : ['Standard', 'Target'] weight : [~~W1~~, ~~W2~~] kernel_type : 'LINEAR'

POSSIBLE NODE NAMES: - PatternSearch

- PatternSearchNode

- Pattern_Search

POSSIBLE INPUT TYPES: - PredictionVector

- FeatureVector

- TimeSeries

Class Components Summary

get_best_parametrization()Perform pattern search get_vector(v_spec)Transform list, tuple or dict to an array/vector input_typesnode_from_yaml(node_spec)Create the node based on the node_spec prepare_optimization()Calculate initial performance value ps_step()Single descent step of the pattern search algorithm re_init()Reset search for optimum v2d(v)Transform vector to dictionary using self.variables -

__init__(start=[], directions=[], start_step_size=1.0, stop_step_size=1e-10, scaling_factor=0.5, up_scaling_factor=1, max_iter=inf, max_bound=[], min_bound=[], red_pars=[], **kwargs)[source]¶

-

get_best_parametrization()[source]¶ Perform pattern search

Evaluate set of directions around current best solution. If better solution is found start new evaluation of directions. Otherwise, reduce length of directions by scaling factor

-

input_types= ['PredictionVector', 'FeatureVector', 'TimeSeries']¶