templates

Module: missions.nodes.templates

Tell the developer about general coding and documentation approaches for nodes

A very useful tutorial can be found under Writing new nodes.

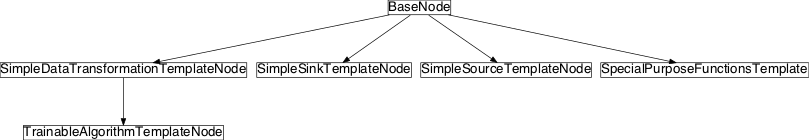

Inheritance diagram for pySPACE.missions.nodes.templates:

Class Summary

| Class | Description |
|---|---|
| SimpleDataTransformationTemplateNode([...]) | Parametrized algorithm, transforming the data without training |
| TrainableAlgorithmTemplateNode([Parameter1, ...]) | Template for trainable algorithms |
| SpecialPurposeFunctionsTemplate([store, ...]) | Introduce additional available functions |
| SimpleSourceTemplateNode(\*\*kwargs) | A simple template that illustrates the basic principles of a source node |
| SimpleSinkTemplateNode([...]) | A simple template that illustrates the basic principles of a sink node |

Classes

SimpleDataTransformationTemplateNode

class pySPACE.missions.nodes.templates.SimpleDataTransformationTemplateNode(Parameter1=42, Parameter2=False, **kwargs)

Bases: pySPACE.missions.nodes.base_node.BaseNode

Parametrized algorithm, transforming the data without training

Describe your algorithm in detail.

In the simplest case, an algorithm only implements its initialization and execution function like this node.

The list of parameters should always be complete and correct to avoid hidden functionality.

References

If this node is using code from other implementations or is described in detail in a publication, mention the reference here.

Parameters

Parameter1: Describe effect and specialties

(recommended, default: 42)

Parameter2: Describe the effect and whether something special happens by default. It is also important to mention which values are accepted (e.g. only True and False).

(optional, default: False)

Exemplary Call

    - node : SimpleDataTransformationTemplate
      parameters:
          Parameter1 : 77
          Parameter2 : False

Input: Type1 (e.g. FeatureVector)

Output: Type2 (e.g. FeatureVector)

Author: Mario Muster (muster@informatik.exelent-university.de)

Created: 2013/02/25

POSSIBLE NODE NAMES:

POSSIBLE INPUT TYPES:

Class Components Summary

| Method | Description |
|---|---|
| _execute(x) | General description of algorithm maybe followed by further details |

__init__(Parameter1=42, Parameter2=False, **kwargs)

Set the basic parameters specific to this algorithm

If your init is not doing anything special, it does not need any documentation. The relevant class documentation is expected to be in the class docstring.

Note

The mapping from the call of the function with a YAML file and this init is totally straightforward. Every parameter in the dictionary description in the YAML file is directly used at the init call. The value of the parameter is transformed with the help of the YAML syntax (see: Using YAML Syntax in pySPACE).

It is important to also use **kwargs, because they have to be forwarded to the base class, using:

super(SimpleDataTransformationTemplateNode, self).__init__(**kwargs)
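The mapping from a YAML specification to the init call can be pictured with a small stand-alone sketch. It does not import pySPACE: `BaseNodeSketch` and `TemplateNodeSketch` are hypothetical stand-ins, and the `spec` dictionary is only an assumption about what the parsed YAML entry looks like. The extra `store` entry is used to show a parameter that the node does not consume itself and therefore forwards to the base class.

```python
class BaseNodeSketch(object):
    """Hypothetical stand-in for BaseNode; it only records forwarded kwargs."""

    def __init__(self, **kwargs):
        self.base_kwargs = kwargs


class TemplateNodeSketch(BaseNodeSketch):
    """Hypothetical node mirroring the template's init signature."""

    def __init__(self, Parameter1=42, Parameter2=False, **kwargs):
        # Forward everything this node does not consume to the base class.
        super(TemplateNodeSketch, self).__init__(**kwargs)
        self.P1 = Parameter1
        self.P2 = Parameter2


# Assumed shape of one parsed YAML node entry as a Python dictionary.
spec = {"node": "TemplateNodeSketch",
        "parameters": {"Parameter1": 77, "Parameter2": False, "store": True}}

# Each entry under "parameters" becomes one keyword argument of __init__.
node = TemplateNodeSketch(**spec["parameters"])
print(node.P1, node.P2, node.base_kwargs)
```

Omitting `**kwargs` in the subclass signature would make the call fail for any base-class parameter such as `store`, which is the reason the forwarding is required.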

Warning

With the call of super comes some hidden functionality. Every self parameter in the init is made permanent via the function set_permanent_attributes() from the base node. Normally, all self parameters are instantiated after this call and have to be made permanent on their own. Permanent means that these parameters are reset to the defined value when the reset() method is called. This is done, for example, during k-fold cross validation when the training fold is changed. For special variable types you may run into trouble, because set_permanent_attributes needs to copy them.

Warning

The init function is called before the distribution of node_chains in the parallel execution, so the node parameters must be storable in the pickle format. If you need parameters that cannot be pickled, initialize them during the first call of the training or execute method.

    self.set_permanent_attributes(P1=Parameter1,
                                  P2=Parameter2,
                                  P3="Hello")

Here self.P3 will be an internal parameter.
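To make the meaning of "permanent" more concrete, here is a minimal sketch of the idea. `PermanentAttributesSketch` is a hypothetical, simplified model written for this page; it is not the BaseNode implementation, and the use of `copy.deepcopy` is an assumption chosen to illustrate why attribute values have to be copyable.

```python
import copy


class PermanentAttributesSketch(object):
    """Hypothetical, simplified model of the permanent-attribute mechanism."""

    def __init__(self):
        self._permanent_state = {}

    def set_permanent_attributes(self, **kwargs):
        for name, value in kwargs.items():
            # A copy is kept, which is why the values must be copyable.
            self._permanent_state[name] = copy.deepcopy(value)
            setattr(self, name, value)

    def reset(self):
        # Restore every permanent attribute to its registered value.
        for name, value in self._permanent_state.items():
            setattr(self, name, copy.deepcopy(value))


node = PermanentAttributesSketch()
node.set_permanent_attributes(P1=42, P2=False, P3="Hello")
node.P1 = 1000      # changed while processing one fold
node.P3 = None
node.reset()        # e.g. when the training fold changes
print(node.P1, node.P3)
```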

_execute(x)

General description of algorithm maybe followed by further details

E.g. log “Hello” during the first call and, if P2 is set to True, always multiply the data with P1; otherwise forward the data unchanged.

Logging is done using _log():

    self._log(self.P3, level=logging.DEBUG)

To access only the data array and not the attached meta data, use data = x.view(numpy.ndarray) for preparation.
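The behaviour described for `_execute` could look roughly like the following sketch. `ExecuteSketch` is a hypothetical stand-in that does not inherit from BaseNode, its `_log` helper only imitates the node logging call, and a plain Python list replaces the numpy-based data type to keep the example free of dependencies.

```python
import logging


class ExecuteSketch(object):
    """Hypothetical stand-in node; only the _execute logic is modelled."""

    def __init__(self, Parameter1=42, Parameter2=False):
        self.P1 = Parameter1
        self.P2 = Parameter2
        self.P3 = "Hello"
        self.logger = logging.getLogger("ExecuteSketch")

    def _log(self, message, level=logging.INFO):
        # Stand-in for the node logging helper.
        self.logger.log(level, message)

    def _execute(self, x):
        if self.P3 is not None:
            # Log the greeting during the first call only.
            self._log(self.P3, level=logging.DEBUG)
            self.P3 = None
        if self.P2:
            # Multiply every value with P1.
            return [self.P1 * value for value in x]
        # Otherwise forward the data unchanged.
        return x


scaled = ExecuteSketch(Parameter1=2, Parameter2=True)._execute([1.0, 2.5])
unchanged = ExecuteSketch(Parameter1=2, Parameter2=False)._execute([1.0, 2.5])
print(scaled, unchanged)
```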

TrainableAlgorithmTemplateNode

class pySPACE.missions.nodes.templates.TrainableAlgorithmTemplateNode(Parameter1=42, Parameter2=False, **kwargs)

Bases: pySPACE.missions.nodes.templates.SimpleDataTransformationTemplateNode

Template for trainable algorithms

SimpleDataTransformationTemplateNode is the base node for this node, so this node does not have to implement an _execute or __init__ function. Often these methods have to be implemented nevertheless, but not here, to keep the example short.

For trainable methods, a minimum of two functions has to be implemented: is_trainable() and _train(). Optionally, four other functions can be overwritten: is_supervised(), _stop_training(), _inc_train() and start_retraining(). The first returns False by default and the other methods do nothing.

Note

The execute function is applied on all data, even the training data, but the true label remains unknown.

Parameters

Please refer to SimpleDataTransformationTemplateNode

Note

Parameter1 is determined by counting the training examples.

Exemplary Call

    - node : TrainableAlgorithmTemplateNode
      parameters:
          Parameter1 : 77
          Parameter2 : False

Input: Type1 (e.g. FeatureVector)

Output: Type2 (e.g. FeatureVector)

Author: Mario Muster (muster@informatik.exelent-university.de)

Created: 2013/02/25

POSSIBLE NODE NAMES:

POSSIBLE INPUT TYPES:

Class Components Summary

| Method | Description |
|---|---|
| _inc_train(data, class_label) | Train on new examples in testing phase |
| _stop_training() | Called after processing of all training examples |
| _train(data, class_label) | Called for each element in training data to be processed |
| is_supervised() | Return True to get access to labels in training functions |
| is_trainable() | Define trainable node, by returning True in this function |
| start_retraining() | Prepare retraining |

_train(data, class_label)

Called for each element in training data to be processed

Incremental algorithms simply use the example to change their parameters, whereas batch algorithms preprocess the data and only store it.

If is_supervised() is not overwritten or returns False, this function is defined without the parameter class_label.

_stop_training()

Called after processing of all training examples

For simplicity, we just reimplement the default.

_inc_train(data, class_label)

Train on new examples in testing phase

During the testing phase of the application, new labeled examples may occur, and this function is used to improve the already trained algorithm on these examples.

Note

This method should always be as fast as possible.

For simplicity, we only forward everything to _train().

For more details on retraining (how to turn it on and how it works), have a look at the documentation of the retrain parameter in the BaseNode.

start_retraining()

Prepare retraining

Normally this method is not needed and does nothing, but some parameters may have to be changed before the first retraining with the _inc_train method. This method provides that possibility.

In our case, we simply reset the starting parameter self.P3.
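The training hooks described in this section can be summarized in one small sketch. `TrainableSketch` is a hypothetical stand-in that does not inherit from the template classes, and the loop at the end only imitates the order in which a node chain might call the hooks; the real call order is handled by BaseNode and is not reproduced here.

```python
class TrainableSketch(object):
    """Hypothetical stand-in; the real node gets these hooks from BaseNode."""

    def __init__(self):
        self.P1 = 0
        self.P3 = "Hello"
        self.labels_seen = []

    def is_trainable(self):
        return True

    def is_supervised(self):
        # Returning True means _train also receives the class label.
        return True

    def _train(self, data, class_label):
        # Count the training examples, as the template does for Parameter1.
        self.P1 += 1
        self.labels_seen.append(class_label)

    def _stop_training(self):
        # Nothing to finalize here; batch algorithms would fit their model.
        pass

    def _inc_train(self, data, class_label):
        # Keep this fast: simply reuse the normal training step.
        self._train(data, class_label)

    def start_retraining(self):
        # Reset the starting parameter before the first incremental update.
        self.P3 = "Hello"


node = TrainableSketch()
for sample, label in [([0.1, 0.2], "Target"), ([0.3, 0.4], "Standard")]:
    node._train(sample, label)
node._stop_training()
node.P3 = None                      # consumed during execution
node.start_retraining()
node._inc_train([0.5, 0.6], "Target")
print(node.P1, node.labels_seen, node.P3)
```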

SpecialPurposeFunctionsTemplate

class pySPACE.missions.nodes.templates.SpecialPurposeFunctionsTemplate(store=False, retrain=False, input_dim=None, output_dim=None, dtype=None, kwargs_warning=True, **kwargs)

Bases: pySPACE.missions.nodes.base_node.BaseNode

Introduce additional available functions

In addition to the aforementioned methods, some algorithms have to overwrite the default behavior of nodes, directly change the normal data flow, manipulate data or labels, or communicate information to other nodes.

Some of these methods will be introduced in the following and some use cases will be given.

Warning

Every method in the BaseNode could be overwritten, but this should be done very carefully to avoid bad side effects.

Class Components Summary

| Method | Description |
|---|---|
| get_result_dataset() | Implementing this function makes a node a sink |
| get_sensor_ranking() | Return sensor ranking fitting to the algorithm |
| process_current_split() | Main processing part on test and training data of current split |
| request_data_for_testing() | Returns data for testing of subsequent nodes of the node chain |
| request_data_for_training(use_test_data) | Returns generator for training data for subsequent nodes of the node chain |
| reset() | Resets the node to a clean state |
| store_state(result_dir[, index]) | Store some additional results or information of this node |

store_state(result_dir, index=None)

Store some additional results or information of this node

Here the parameter self.store should be used to switch on the saving, since this method is always called but should only store information if this parameter is set to True.

This method is automatically called during benchmarking for every node. It is used, for example, to store visualizations of algorithms or data.

In addition to the result_dir, the node name should be used. If you expect this node to occur multiple times in a node chain, also use the index. This can be done, for example, like this:

    import os
    from pySPACE.tools.filesystem import create_directory
    if self.store:
        # set the specific directory for this particular node
        node_dir = os.path.join(result_dir, self.__class__.__name__)
        # do we have an index number?
        if index is not None:
            # add the index number
            node_dir += "_%d" % int(index)
        create_directory(node_dir)

Furthermore, it is very important to integrate the split number into the file name when storing, because otherwise your results will be overwritten. The convention in pySPACE is to use a meaningful name for the part of the node you store, followed by an underscore, ‘sp’ and the split number, as done in

file_name = "%s_sp%s.pickle" % ("patterns", self.current_split)
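Combining the directory and file-name conventions, a storing routine might be sketched as follows. `store_state_sketch` is a hypothetical free function rather than the node method, `os.makedirs` is swapped in for pySPACE's `create_directory` so the example runs without pySPACE, and the pickled payload is made-up illustration data.

```python
import os
import pickle
import tempfile


def store_state_sketch(result_dir, node_name, current_split, payload,
                       store=True, index=None):
    """Hypothetical helper following the naming convention described above."""
    if not store:
        # Called in every case, but only stores when switched on.
        return None
    node_dir = os.path.join(result_dir, node_name)
    if index is not None:
        # Distinguish several instances of the same node in one chain.
        node_dir += "_%d" % int(index)
    if not os.path.isdir(node_dir):
        os.makedirs(node_dir)  # stand-in for pySPACE's create_directory
    # The split number in the file name prevents overwriting between splits.
    file_name = "%s_sp%s.pickle" % ("patterns", current_split)
    path = os.path.join(node_dir, file_name)
    with open(path, "wb") as handle:
        pickle.dump(payload, handle)
    return path


result_dir = tempfile.mkdtemp()
first = store_state_sketch(result_dir, "MyNode", 0, {"w": [1, 2]}, index=3)
second = store_state_sketch(result_dir, "MyNode", 1, {"w": [3, 4]}, index=3)
skipped = store_state_sketch(result_dir, "MyNode", 0, {}, store=False)
print(first, second, skipped)
```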

reset()

Resets the node to a clean state

Every parameter set with set_permanent_attributes() is by default reset here to its specified value, or deleted if no value is specified.

Because this method copies every parameter, or because some variables escape the normal class variable scope, some nodes need to overwrite this method.

When you really need to overwrite this method, some points have to be considered. For the normal functionality of the node, the super method needs to be called. To avoid deletion of the special variables, they have to be stored in local variables beforehand and assigned back to the instance afterwards. This is shown in the following example code, taken from the SameInputLayerNode.

    def reset(self):
        ''' Also reset internal nodes '''
        nodes = self.nodes
        for node in nodes:
            node.reset()
        super(SameInputLayerNode, self).reset()
        self.nodes = nodes
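The save-and-restore pattern can be tried out in isolation with the sketch below. All three classes are hypothetical stand-ins: `BaseSketch.reset` simply drops every instance attribute, which is a deliberate exaggeration of the base behaviour used to show why the local reference is needed.

```python
class BaseSketch(object):
    """Hypothetical stand-in whose reset drops every instance attribute."""

    def reset(self):
        self.__dict__.clear()


class ChildSketch(object):
    """Hypothetical internal node with its own, simpler reset."""

    def __init__(self):
        self.trained = False

    def reset(self):
        self.trained = False


class LayerSketch(BaseSketch):
    """Hypothetical node holding internal nodes that must survive reset."""

    def __init__(self, nodes):
        self.nodes = nodes
        self.cache = "temporary"

    def reset(self):
        nodes = self.nodes              # keep a local reference
        for node in nodes:
            node.reset()                # reset the internal nodes as well
        super(LayerSketch, self).reset()
        self.nodes = nodes              # assign the attribute back afterwards


child = ChildSketch()
child.trained = True
layer = LayerSketch([child])
layer.reset()
print(layer.nodes, hasattr(layer, "cache"), child.trained)
```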

request_data_for_training(use_test_data)

Returns generator for training data for subsequent nodes of the node chain

If use_test_data is true, all available data is used for training, otherwise only the data that is explicitly for training.

These methods normally use the MemoizeGenerator to define their generator. When implementing such a method, one should always try not to duplicate data but only to redirect it, without storing it additionally.

The definition or redefinition of training data is done by source and splitter nodes.
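The idea of letting several consumers iterate over the same upstream data without recomputing or copying it can be sketched with a small caching wrapper. `MemoizingGeneratorSketch` and its `fresh` method are hypothetical and written only for this illustration; this is not pySPACE's MemoizeGenerator, whose interface and behaviour may differ.

```python
class MemoizingGeneratorSketch(object):
    """Hypothetical cache around a generator; not pySPACE's MemoizeGenerator."""

    def __init__(self, generator):
        self._generator = generator
        self._cache = []

    def fresh(self):
        """Return a new iterator that replays cached items, then continues."""
        position = 0
        while True:
            if position < len(self._cache):
                yield self._cache[position]
            else:
                try:
                    item = next(self._generator)
                except StopIteration:
                    return
                # Keep a reference to the item; nothing is duplicated.
                self._cache.append(item)
                yield item
            position += 1


calls = []


def upstream():
    for sample in ([0.1], [0.2], [0.3]):
        calls.append(sample)   # record how often upstream work is done
        yield sample, "label"


memo = MemoizingGeneratorSketch(upstream())
first_pass = list(memo.fresh())
second_pass = list(memo.fresh())
print(len(calls), first_pass == second_pass)
```

In this sketch the second pass receives the very same objects as the first one, so the upstream processing runs only once per sample.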

request_data_for_testing()

Returns data for testing of subsequent nodes of the node chain

When defining request_data_for_training(), this method normally has to be implemented or overwritten too, and vice versa.

process_current_split()

Main processing part on test and training data of current split

This method is called when benchmark node chains are used and defines the gathering of the result data of the node chain for a sink node. It gets the data by calling request_data_for_training() and request_data_for_testing().

In the case of using the CrossValidationSplitterNode, this method is called multiple times, once for each split, and each time stores the result separately in the result dataset.

Though this approach seems very complicated at first sight, it gives three very strong advantages.

- The cross validation can be done exactly before the first trainable node in the node chain and circumvents unnecessary double processing.

- By handling indices instead of real data, the data for training and testing is not copied and memory is saved.

- The cross validation is very easy to use.

Moving this functionality to the dataset_types would make the usage much more complicated and inefficient. Especially for nodes which internally use node chains, like the parameter_optimization nodes, this easy access pays off.
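The memory argument about handling indices instead of real data can be illustrated with a short sketch. `split_indices` is a hypothetical helper using a simple interleaved fold assignment; it is not the strategy of the CrossValidationSplitterNode, which is not shown in this document.

```python
def split_indices(n_samples, n_splits):
    """Return one (train_indices, test_indices) pair per split."""
    # Interleaved folds: sample i belongs to fold i % n_splits.
    folds = [list(range(start, n_samples, n_splits))
             for start in range(n_splits)]
    pairs = []
    for current_split in range(n_splits):
        test_idx = folds[current_split]
        train_idx = sorted(i for k, fold in enumerate(folds)
                           if k != current_split for i in fold)
        pairs.append((train_idx, test_idx))
    return pairs


data = ["s0", "s1", "s2", "s3", "s4", "s5"]   # stored once, never copied
results = []
for train_idx, test_idx in split_indices(len(data), n_splits=3):
    # Only the indices change between splits; samples are looked up lazily.
    training = (data[i] for i in train_idx)
    testing = (data[i] for i in test_idx)
    results.append((len(list(training)), list(testing)))
print(results)
```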

get_sensor_ranking()

Return sensor ranking fitting to the algorithm

For usage with the ranking variant in the SensorSelectionRankingNode, this method of the node is called to get the ranking used to reduce sensors.

The ranking is a sorted list of tuples (sensor name, weight). The first element has to correspond to the sensor with the lowest weight, meaning it is the least important.

Note

The code here is a copy from base, which takes the classification vector self.features and sums up the absolute values belonging to one channel. It is only used as an example.
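The summation of absolute weights per channel could be sketched as below. `sensor_ranking_sketch` is a hypothetical function, and the feature naming scheme "<sensor>_<rest>" as well as the example names and weights are assumptions made for illustration; the actual pySPACE feature names may be structured differently.

```python
def sensor_ranking_sketch(feature_names, weights):
    """Sum absolute weights per sensor; least important sensor comes first."""
    totals = {}
    for name, weight in zip(feature_names, weights):
        # Assumed naming scheme: "<sensor>_<rest>"; real names may differ.
        sensor = name.split("_")[0]
        totals[sensor] = totals.get(sensor, 0.0) + abs(weight)
    # Sort ascending by weight so the first entry is the least important.
    return sorted(totals.items(), key=lambda item: item[1])


names = ["C3_0.1sec", "C3_0.2sec", "Cz_0.1sec", "Cz_0.2sec", "Pz_0.1sec"]
weights = [0.5, -1.5, 0.25, -0.25, 3.0]
ranking = sensor_ranking_sketch(names, weights)
print(ranking)
```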

SimpleSourceTemplateNode

class pySPACE.missions.nodes.templates.SimpleSourceTemplateNode(**kwargs)

Bases: pySPACE.missions.nodes.base_node.BaseNode

A simple template that illustrates the basic principles of a source node

In pySPACE, source nodes are used at the beginning of the node chain. The source nodes are responsible for the input of data, be it from a static source or from a live stream.

It is very important to note that these nodes just serve the purpose of providing the node chain with an input dataset and do not perform any changes on the data itself. That being said, these nodes do not have an input node and are not trainable.

In the following, we will discuss the general strategy for building a new source node for a static input data set which has been saved to disk. In the case of more complicated inputs, please consult the documentation of ExternalGeneratorSourceNode and Stream2TimeSeriesSourceNode.

POSSIBLE NODE NAMES:

POSSIBLE INPUT TYPES:

Class Components Summary

| Method | Description |
|---|---|
| getMetadata(key) | Return the value corresponding to the given key from the dataset meta data of this source node |
| request_data_for_testing() | Returns the data that can be used for testing of subsequent nodes |
| request_data_for_training(use_test_data) | Returns the data that can be used for training of subsequent nodes |
| set_input_dataset(dataset) | Sets the dataset from which this node reads the data |
| use_next_split() | Return False |

__init__(**kwargs)

Initialize some values to 0 or None

The initialization routine of the source node is basically empty. Should you feel the need to do something in this part of the code, you can initialize the input_dataset to None. This attribute will then later be changed when the set_input_dataset method is called.

If the user wants to generate the dataset inside the SourceNode, however, this should be done in the __init__ method. A good example of this practice can be found in the RandomTimeSeriesSourceNode.

set_input_dataset(dataset)

Sets the dataset from which this node reads the data

This method is the beginning of the node. Put simply, this method starts the feeding process of your node chain by telling the node chain where to get the data from.

request_data_for_training(use_test_data)

Returns the data that can be used for training of subsequent nodes

This method streams training data and sends it to the subsequent nodes. If one looks at the tutorial related to building new nodes (available in the tutorial section), one can see exactly where the request_data methods are put to use.

The following example is one that was extracted from the FeatureVectorSourceNode, which should (in theory at least) be implementable for all types of data.

request_data_for_testing()

Returns the data that can be used for testing of subsequent nodes

The principles of obtaining the testing data are the same as those used in obtaining the training data set. The only difference here is that, when there is no testing data available, we allow the training data to be used as testing data.
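The interplay of `set_input_dataset` and the two request methods, including the fallback to training data, might be sketched as follows. `SourceSketch` is a hypothetical stand-in and not the code of FeatureVectorSourceNode; in particular, the dataset layout (a dictionary with "train" and "test" lists of sample-label pairs) is an assumption, since pySPACE datasets are structured differently.

```python
class SourceSketch(object):
    """Hypothetical stand-in for a source node reading a static dataset."""

    def __init__(self):
        # Changed later, when set_input_dataset is called.
        self.input_dataset = None

    def set_input_dataset(self, dataset):
        # Assumed layout: dict with "train" and "test" lists of
        # (sample, label) pairs; pySPACE datasets look different.
        self.input_dataset = dataset

    def request_data_for_training(self, use_test_data):
        for pair in self.input_dataset["train"]:
            yield pair
        if use_test_data:
            for pair in self.input_dataset["test"]:
                yield pair

    def request_data_for_testing(self):
        test = self.input_dataset["test"]
        # Fall back to the training data when no test data is available.
        source = test if test else self.input_dataset["train"]
        for pair in source:
            yield pair


node = SourceSketch()
node.set_input_dataset({"train": [([1.0], "A"), ([2.0], "B")], "test": []})
training = list(node.request_data_for_training(use_test_data=False))
fallback = list(node.request_data_for_testing())
print(training, fallback)
```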

SimpleSinkTemplateNode

class pySPACE.missions.nodes.templates.SimpleSinkTemplateNode(selection_criterion=None, data=None, **kwargs)

Bases: pySPACE.missions.nodes.base_node.BaseNode

A simple template that illustrates the basic principles of a sink node

The sink node is always placed at the end of the node chain. You can think of a sink node as a place into which you can throw all your data and which will do something with this data, e.g. save it to disk.

Of course, this is not the only possibility for a Sink node, but it is the most basic one. One example of a more complex process happening inside the Sink node is that of the PerformanceSinkNode, whereby the classification results are collected into a complex structure that reflects the performance of the entire node chain.

That being said, this template addresses the very simple case of just collecting the results of the node chain and doing something with them.

For a complete list of the available nodes, please consult sink

POSSIBLE NODE NAMES:

POSSIBLE INPUT TYPES:

Class Components Summary

| Method | Description |
|---|---|
| _train(data, label) | Tell the node what to do with specific data inputs |
| get_result_dataset() | Return the result dataset |
| is_supervised() | Returns True if the node requires supervised training |
| is_trainable() | Return True if the node is trainable |
| process_current_split() | The final processing step for the current split |
| reset() | Reset the permanent parameters of the node chain |

__init__(selection_criterion=None, data=None, **kwargs)

Initialize some criterion of selection for the data

In the initialization stage, the node is expected to just save some permanent attributes that it might use at a later point in time. In the case of FeatureVector data, this criterion might represent selected channel names (as implemented in FeatureVectorSinkNode), while for TimeSeries it might represent a sorting criterion, as implemented in TimeSeriesSinkNode.

Since this is only a template, we will call our selection criterion selection_criterion and leave it up to the user to implement specific selection criteria.

is_trainable()

Return True if the node is trainable

While the sink nodes do not need to be trained, they do need access to the training data that is sent through the node chain. In order to achieve this, the is_trainable() function from the BaseNode is overwritten such that it always returns True when access to the training data is required.

is_supervised()

Returns True if the node requires supervised training

The function will almost always return True. If the node requires access to the training data, i.e. if the node is trainable, it will almost surely also be supervised.

_train(data, label)

Tell the node what to do with specific data inputs

In the case of Sink nodes, the _train function is usually overwritten with a dummy function that either returns the input data (e.g. AnalyzerSinkNode) or does not return anything (as we will implement it here).
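A sink that merely collects results per split could be sketched as follows. `SinkSketch` is a hypothetical stand-in that receives its upstream data as a plain list in the constructor, which is an assumption made to keep the example self-contained; a real sink obtains the data through the request methods of its input node.

```python
class SinkSketch(object):
    """Hypothetical stand-in for a sink collecting one result list per split."""

    def __init__(self, upstream_test_data):
        self.upstream_test_data = upstream_test_data
        self.current_split = 0
        self.result = {}

    def is_trainable(self):
        return True      # ask for the training data to be passed through

    def is_supervised(self):
        return True      # labels are needed alongside the data

    def _train(self, data, label):
        pass             # dummy: a sink has nothing to learn

    def process_current_split(self):
        # Store the results of this split separately from earlier splits.
        self.result[self.current_split] = list(self.upstream_test_data)

    def get_result_dataset(self):
        return self.result


sink = SinkSketch([([0.1], "A"), ([0.2], "B")])
trained = sink._train([0.1], "A")
sink.process_current_split()
sink.current_split = 1
sink.process_current_split()
collected = sink.get_result_dataset()
print(sorted(collected.keys()))
```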

reset()

Reset the permanent parameters of the node chain

When used inside a node chain, the Sink node should also be responsible for saving the permanent state parameters. These parameters get reinitialized whenever the node chain reaches its end. Nevertheless, the parameters should be saved such that they can be inspected after the entire procedure has finished.

The following piece of code was adapted from FeatureVectorSinkNode, with the FeatureVector specific parameters changed to dummy variables.